As Artificial Intelligence continues to advance, faculty and staff of academic institutions adapt to the technological revolution.

Northwestern State University of Louisiana faculty and staff are adapting to the technological advancement of AI through research, education and the implementation of official policies.

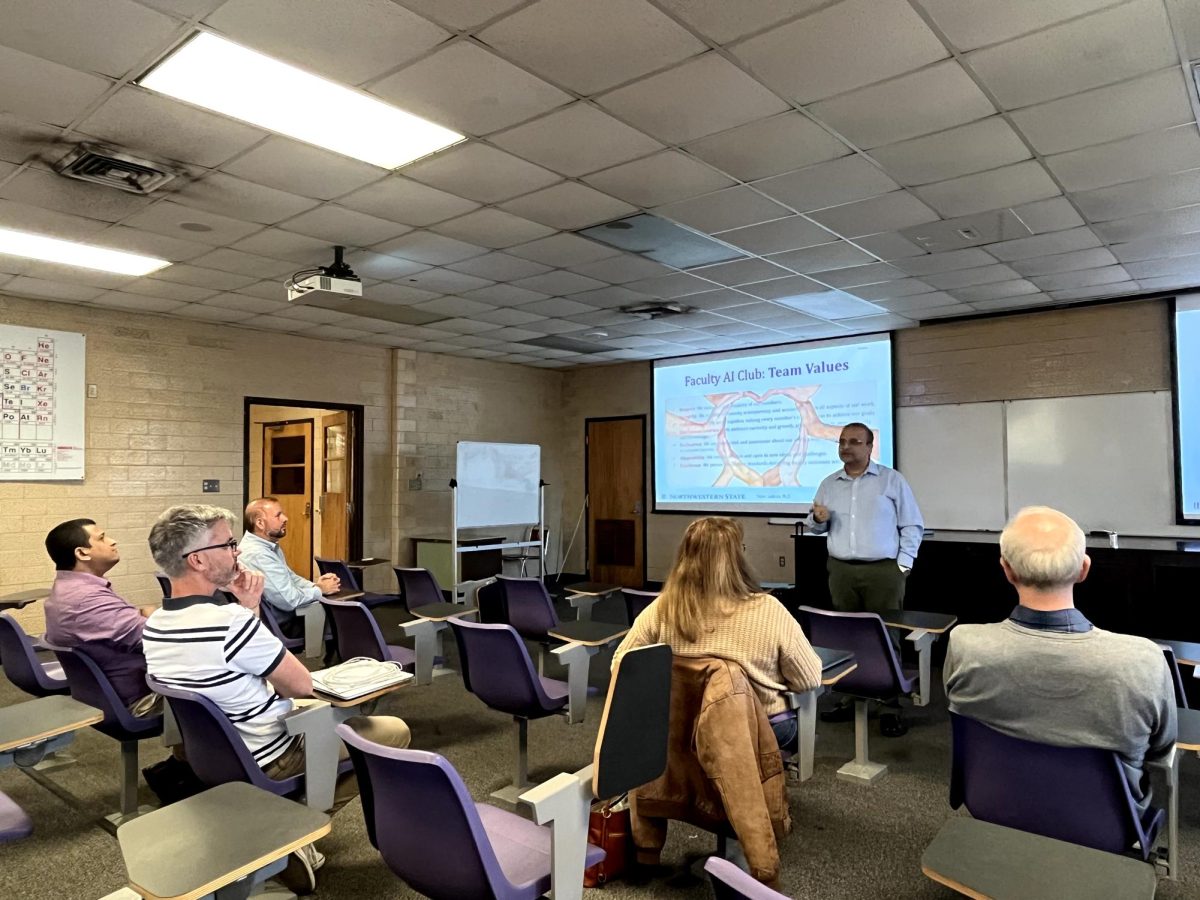

The Artificial Intelligence Club is an organization of NSU faculty and staff working to educate its members on topics regarding AI.

Nabin Sapkota, associate professor of engineering technology, is the acting head of the AI Club.

“I was trained by some professionals in AI last semester, and I am trying to get that training and give it to our family here,” Sapkota said. “I’m not trying to make them experts in Python programming, but we should understand what the program says like a basic foundation.”

Sapkota explained that the purpose of the AI Club is to ensure that NSU faculty members are knowledgeable about AI and equipped to teach students about it.

“I hope we will be good at delivering the needs of today and tomorrow because, if we’re only acting now, it is already late,” Sapkota said.

He said the AI Club's current goals include securing a National Science Foundation grant to fund faculty training in AI theory and applications, developing an AI lab within the NSU School of Science, Technology, Engineering and Math (STEM), integrating additional AI-related courses into the existing curriculum, assessing the ways in which NSU can benefit from state-led AI initiatives and conducting AI-focused seminars for student organizations upon request.

Members of the AI Club have shown genuine interest in the organization's activities, and their enthusiasm has spread across NSU.

“This started with a few self-motivated faculty members in the School of STEM, but we have been shown good support by the dean, provost, now president, so I’m hoping something very good will come out of all these activities and involvement,” Sapkota said.

The AI Club currently has 13 official members. However, the club and its members host workshops and seminars on AI topics that are open to students and nonmembers.

“Starting this semester, we are expanding our outreach to include all interested faculty and students. Each event will be announced through the messenger service to ensure broad awareness and participation,” Sapkota said.

Several AI Club faculty members have already given presentations on their AI-related projects to educate the student body. Many of these faculty members support an initiative to incorporate formal AI education at NSU. Sapkota hopes that formal AI education will help faculty and staff define a clear role for AI in the classroom.

“Barring them from learning AI, that shouldn’t be the responsibility of academic institutions. We should teach because society is moving that way, and we are responsible for creating and producing individuals that better fit in that society,” Sapkota said.

Damien Tristant, a member of the AI Club and assistant professor of physics, explained how the organization allows educators to evaluate the multidisciplinary uses of AI in education.

“All of these teachers are from different fields, so they may have different points of view, observations, of AI,” Tristant said. “So in my case, I’m a physicist, so I know how I will use AI, but someone like Dr. Mischler, who is teaching English: how can he use it? So maybe he will show us another vision of AI.”

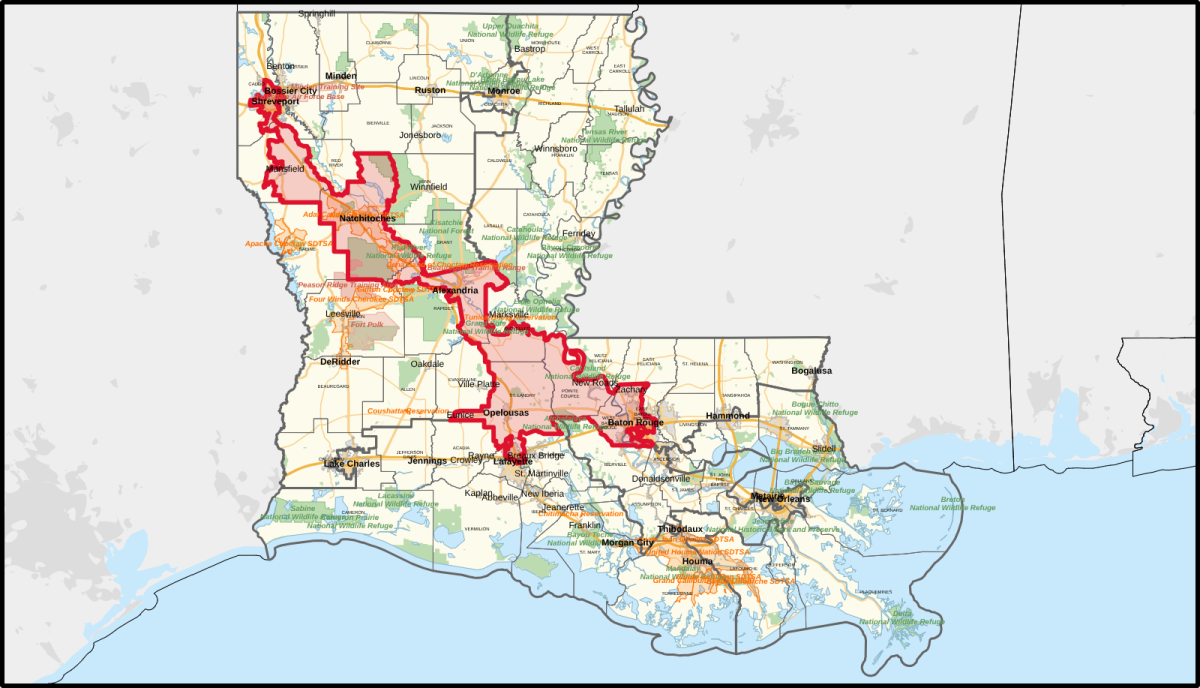

Tristant is currently working on a project that will use AI to identify how Fournet Hall reacts to outside factors such as temperature and light.

“So first, we need to understand how the building reacts with the outside conditions, the temperature, the pressure, all of that, and we will map out the entire building,” Tristant said. “Then, we will use all the data we have to see if there is a correlation between them and how we can improve the building. For that, we use AI.”

This project is part of Tristant’s work with the Fédération Française du Bâtiment (FFB) in collaboration with the National Center for Preservation Technology and Training (NCPTT). The NCPTT works to identify ways to use advances in technology to preserve history. The project specifically targets the preservation of historic buildings, such as Fournet Hall, by identifying how they respond to Louisiana's environmental conditions.

In using AI for this project, Tristant makes sure to double-check any sources the AI provides. He prefers to use AI for menial tasks, saving his own time for larger ones.

“I want to know where the source comes from. I am not going to just copy and paste something, but I’m gonna use that as an idea, and then, after, I will do my own research,” Tristant said.

Tristant does not bar his students from using AI, but he believes they should not rely on it without understanding where its information comes from.

“The AI is here to help you, but you need to understand what you are doing. The AI is not here to make you do your job,” Tristant said. “Otherwise, we will not be working with the humans anymore, and we’ll just be working with the machines, which we don’t want. We want to make sure that humans control the machine and not the opposite.”

Tristant explained the importance of educating students on how to properly use AI.

“It’s better to teach them how to use AI than to tell them not to use it. So, in my case, I encourage them to use AI,” Tristant said. “However, I want to make sure that they know the framework, the limitations, and when they can use it and when they cannot use it.”

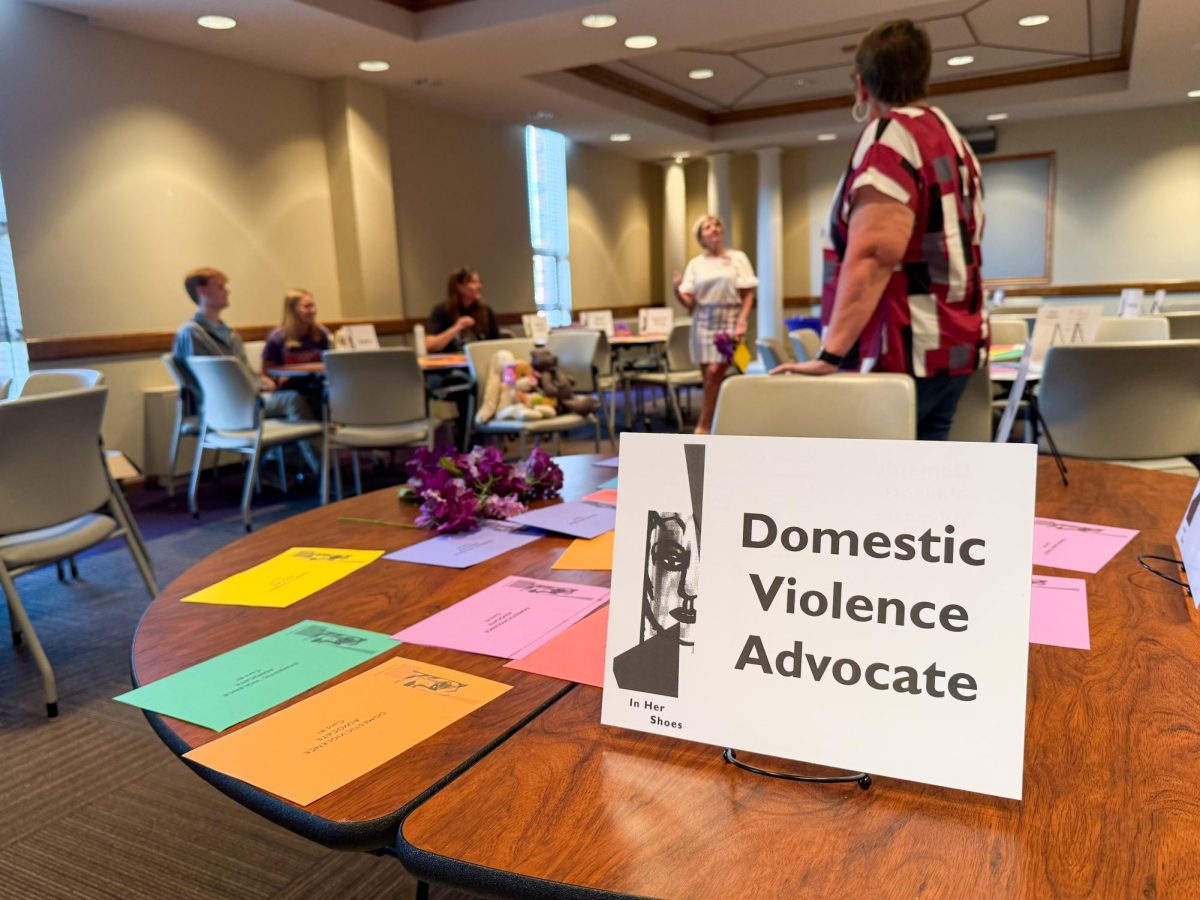

James Mischler, director of the Institutional Review Board (IRB), has begun implementing formal frameworks and limitations similar to those Tristant described. Mischler began this work with the IRB in summer 2024, following the release of ChatGPT, after realizing the need for policies on data privacy and the accuracy of sources.

“As the institutional review board, we are charged by the federal government to keep human subjects safe from harm, and one of those harms could be privacy harms,” Mischler said.

The policies that Mischler has developed are specifically geared toward the ethical use of AI in research studies and focus on four key ethical issues. Data privacy was the first issue Mischler wanted to address.

“In a research study, often you’re collecting with human beings, you’re collecting data from them, and often it’s private data, and our concern was whether or not that’s okay. As in, could the AI system keep it private,” Mischler said.

Next, the policy addresses transparency, which covers the accuracy and proper documentation of sources. Because AI can generate its own data, the policy is meant to ensure that research is accurate and credible. This ties into the third issue, accountability, which means crediting information accurately and honestly. If any part of the research came from an AI tool, the author must disclose it.

The policy statement then addresses the final issue: authorship. Under U.S. copyright law, material generated by AI without human authorship cannot be copyrighted, so AI cannot be listed as an author.

Mischler said these issues are interconnected and together ensure the responsible use of AI in research.

“The whole point of our research policy statement, was a statement boiled down to the page and a half that says to use AI responsibly,” Mischler said. “It sounds kind of like, you know, ‘drink responsibly,’ but that’s really the crux of the issue is that — the IRB — we’re looking at the ethical use of research and making sure that it’s used in a way that helps people rather than harms people.”

This research policy statement currently applies to the IRB and NSU’s Department of English, Languages and Cultural Studies.

For now, AI policies in NSU syllabi can vary by department. Since Fall 2023, English department syllabi have stated that any use of AI without the instructor's permission is considered plagiarism.

“The AI system is a helper, an assistant and provides ideas, but in the end the student has to write what they think is important and so they shouldn’t let the AI dictate to them what they should or should not be writing,” Mischler said.

As for a cross-departmental policy, Mischler believes it should be flexible enough to apply to the many fields at NSU.

“I really think some sort of underlying philosophical principles or practical principles about what responsible use of AI means would be great,” Mischler said. “It would also allow flexibility for people to find creative ways to use AI without doing it in a way that, even inadvertently, is irresponsible.”

Above all, Mischler said, any policy on AI use must include human oversight. “I think the idea that’s starting to come out of all this is that there has to be human oversight of an AI system. The human being must always be the one making the final decision,” Mischler said.

For now, there are no comprehensive official federal, state or university policies on AI. However, Megan Lowe, director of university libraries, helped write an academic integrity policy on AI that applies across NSU departments. A key focus for Lowe in the writing process was leaving room for faculty to add their own statements.

“When I was helping author that policy, we tried to make it to where individual faculty or individual departments had the freedom to write policies for individual courses or individual programs that reflected their needs and concerns around the use of generative AI in their specific disciplines,” Lowe said.

The policy was approved by the Faculty Senate during the Fall 2024 semester and will be integrated into the faculty and student handbooks in Fall 2025.

“Now, this is probably going to be the only campus-wide policy related to generative AI because there’s not going to be a universal policy — or there can’t be, in my opinion,” Lowe said. “I don’t believe that there can be an institutional level policy that could be articulated that would allow for all the possible uses of gen. AI.”

While a universal policy may be difficult given AI's broad reach, Lowe is working on a universal, self-guided course in AI literacy.

“We’re on the front lines of this thing, learning right along with y’all, so we’re trying to develop AI literacy programs to help our students,” Lowe said.

The course would be available across the University of Louisiana System and would cover topics such as the history and development of generative AI, ethics, environmental implications, impacts on human labor and more. It is currently under discussion in the provost's office and is expected to launch by March 2025.

As AI advances, higher education must advance with it. Through research, policy and a better understanding of AI, universities can harness the opportunities it brings.

“AI is applicable in every field whether it is science, whether it is art, whether it is in business, it is everywhere,” Sapkota said. “In your cell phone, you have the entire knowledge of human history, everything so far we have found, you have access to that. Knowledge is within our reach; all we need to know is how to harness it.”
